Adversarial Attack, Defense, and Verification

With the increasing deployment of machine learning models, a natural question is whether they are secure against diverse attacks. Existing studies show that adding small perturbations to input data can fool a machine learning model into misclassifying an object (e.g., a dog as a cat).
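To make the idea of a "small perturbation" concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a standard textbook attack. We use it only for illustration; it is not necessarily the attack from our ICASSP’21 work. The sketch assumes a toy linear binary classifier (+1 for "dog", -1 for "cat"), and the weights, input, and budget `eps` are made-up values. Each feature moves by at most `eps` in the direction that increases the loss, which here is enough to flip the prediction.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


def sign(v: float) -> int:
    # Returns +1, -1, or 0 (for v == 0), like numpy.sign.
    return (v > 0) - (v < 0)


def predict(w: Vector, b: float, x: Vector) -> int:
    """Toy linear classifier: +1 (e.g. 'dog') if w.x + b > 0, else -1 (e.g. 'cat')."""
    return 1 if dot(w, x) + b > 0 else -1


def fgsm_linear(w: Vector, x: Vector, y: int, eps: float) -> Vector:
    """FGSM for a linear model with true label y in {+1, -1}.

    The margin loss L(x) = -y * (w.x + b) has gradient -y * w with respect to x,
    so each feature is shifted by eps in the direction sign(-y * w_i).
    """
    return [xi + eps * sign(-y * wi) for xi, wi in zip(x, w)]


# Hypothetical model and input, chosen only for illustration.
w, b = [1.0, -2.0, 0.5], 0.0
x = [0.3, -0.1, 0.2]
y = predict(w, b, x)
x_adv = fgsm_linear(w, x, y, eps=0.2)
print(predict(w, b, x), predict(w, b, x_adv))  # 1 -1
```

For a deep network the gradient comes from backpropagation rather than a closed form. The update rule `x + eps * sign(grad)` is the same.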

Our research focuses on building new adversarial attacks to raise awareness of the security risks of machine learning models (ICASSP’21), designing new robust models that defend against adversarial attacks (AAAI’21), and building GPU-based verification tools that efficiently verify the robustness of machine learning models against adversarial attacks (ATC’22).
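To show what "verifying robustness" means, here is a minimal sketch of interval bound propagation (IBP). IBP is a simple, sound but incomplete verification technique, swapped in here for illustration; it is not the algorithm of our ATC’22 GPU-based tool. The sketch assumes a hypothetical one-hidden-layer ReLU network with a single output whose sign gives the class. It pushes the whole box of inputs within L-infinity distance `eps` of `x` through the network and checks that the output bound never crosses zero. A `True` result proves that no perturbation in the box changes the prediction. A `False` result only means this method could not prove robustness.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def affine_bounds(W: Matrix, b: Vector, lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """Bound y = W x + b over the box lo <= x <= hi.

    A positive weight takes the same-side bound of its input and a negative
    weight takes the opposite-side bound.
    """
    out_lo, out_hi = [], []
    for row, bi in zip(W, b):
        out_lo.append(bi + sum(wij * (lo[j] if wij >= 0 else hi[j]) for j, wij in enumerate(row)))
        out_hi.append(bi + sum(wij * (hi[j] if wij >= 0 else lo[j]) for j, wij in enumerate(row)))
    return out_lo, out_hi


def relu_bounds(lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    # ReLU is monotone, so applying it to both bounds stays sound.
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]


def verify_robust(W1: Matrix, b1: Vector, W2: Matrix, b2: Vector,
                  x: Vector, eps: float, label: int) -> bool:
    """Return True if every x' with |x'_i - x_i| <= eps keeps the sign of f(x') equal to label."""
    lo = [xi - eps for xi in x]
    hi = [xi + eps for xi in x]
    lo, hi = affine_bounds(W1, b1, lo, hi)
    lo, hi = relu_bounds(lo, hi)
    lo, hi = affine_bounds(W2, b2, lo, hi)  # single output neuron
    return lo[0] > 0 if label == 1 else hi[0] < 0


# Hypothetical network: f(x) = W2 relu(W1 x + b1) + b2; here f(x) = 1.0, so the label is +1.
W1, b1 = [[1.0, -1.0], [0.5, 1.0]], [0.0, 0.0]
W2, b2 = [[1.0, 1.0]], [-0.5]
x = [1.0, 0.0]
print(verify_robust(W1, b1, W2, b2, x, eps=0.1, label=1))  # True
print(verify_robust(W1, b1, W2, b2, x, eps=1.0, label=1))  # False
```

With `eps=1.0` the box contains the point `[0.0, 0.0]`, where `f = -0.5`, so the network really is not robust at that radius. Each step is a batch of independent elementwise or matrix operations, which is part of why this style of bound propagation maps naturally onto GPUs.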

Reference Work

Private Machine Learning with Zero-Knowledge Proof

Privacy has attracted increasing attention in recent years. Users would like to protect the privacy of their data while still benefiting from commercial machine learning models (e.g., recommendation systems). Companies, in turn, want to keep their models private while still convincing third parties of their models' accuracy.

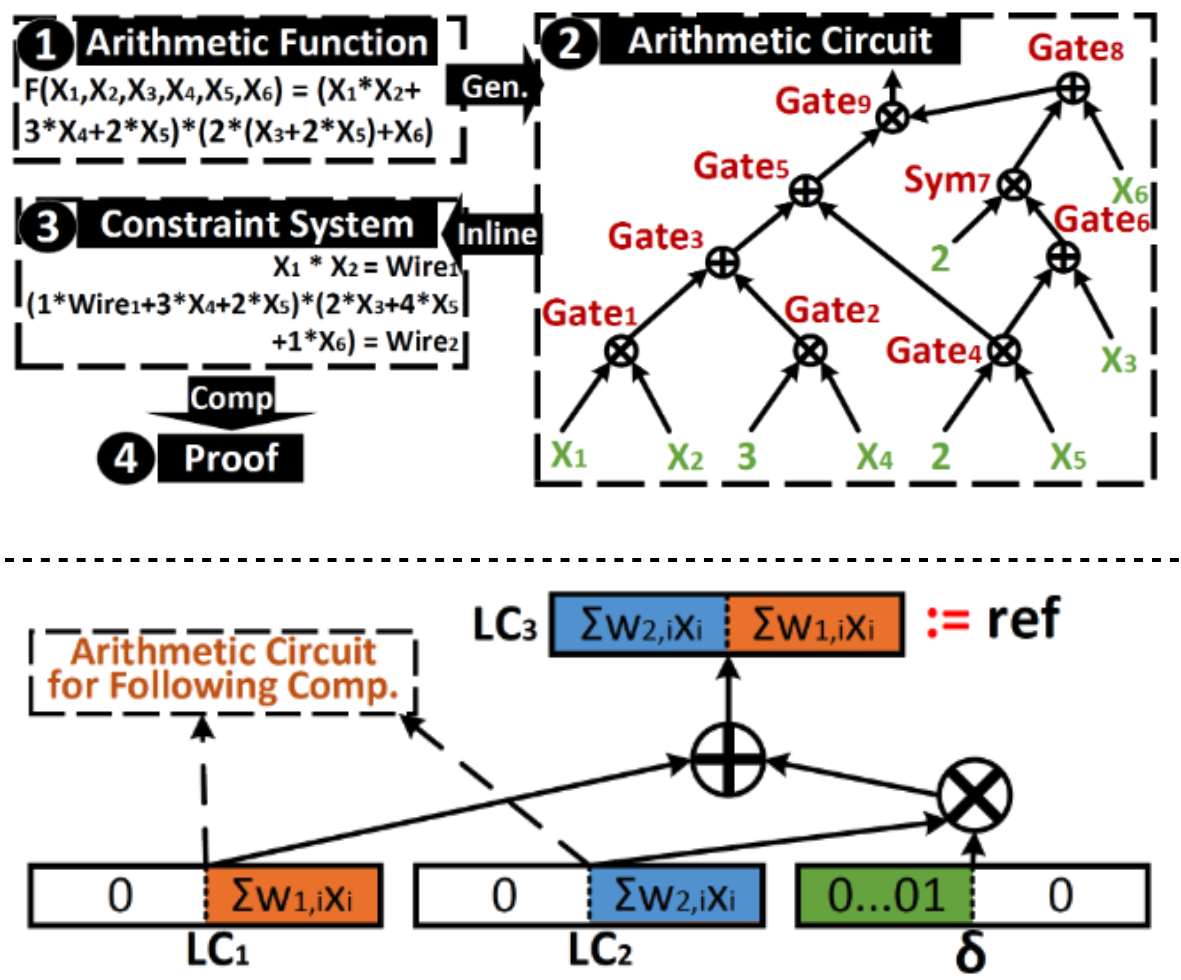

To tackle this problem, we build systems for private machine learning with zero-knowledge proofs (ZKP). We first build a compiler, ZEN, that efficiently supports deep neural networks with zero-knowledge proofs. We then design ZENO, a type-based optimization framework for zero-knowledge neural network inference.
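For readers new to zero-knowledge proofs, here is a minimal sketch of the core idea: a prover convinces a verifier that it knows a secret without revealing it. The example is the textbook Schnorr proof of knowledge of a discrete logarithm, made non-interactive with the Fiat-Shamir heuristic. It is not how ZEN or ZENO prove neural network inference. The group parameters `P`, `Q`, `G` are toy values chosen for illustration and are far too small to be secure. In the private-ML setting, the secret would play the role of the private model or data.

```python
import hashlib
import secrets

# Toy group parameters (insecure, illustration only).
P = 2039  # safe prime: P = 2 * Q + 1
Q = 1019  # prime order of the subgroup generated by G
G = 4     # 4 = 2^2 is a quadratic residue mod P, so it has order Q


def challenge(*values: int) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the public transcript."""
    data = ",".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret_x: int):
    """Prove knowledge of secret_x such that y = G^secret_x mod P."""
    y = pow(G, secret_x, P)        # public value
    r = secrets.randbelow(Q)       # fresh uniformly random nonce
    t = pow(G, r, P)               # commitment
    c = challenge(G, y, t)         # challenge bound to the commitment
    s = (r + c * secret_x) % Q     # response; secret_x is masked by the random r
    return y, (t, s)


def verify(y: int, proof) -> bool:
    """Accept iff G^s == t * y^c (mod P); the verifier never sees secret_x."""
    t, s = proof
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


y, proof = prove(secret_x=123)
print(verify(y, proof))  # True
```

The verifier learns only `y`, `t`, and `s`. Because `r` is uniform, `s` is also uniform and leaks nothing about `secret_x` on its own. Proving a whole neural network inference requires proof systems for general arithmetic circuits, which is the setting that compilers such as ZEN target.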

Reference Work

ZEN: Efficient Zero-Knowledge Proofs for Neural Networks. Preprint [paper]

ZENO: A Type-based Optimization Framework for Zero Knowledge Neural Network Inference. [ASPLOS’2024]